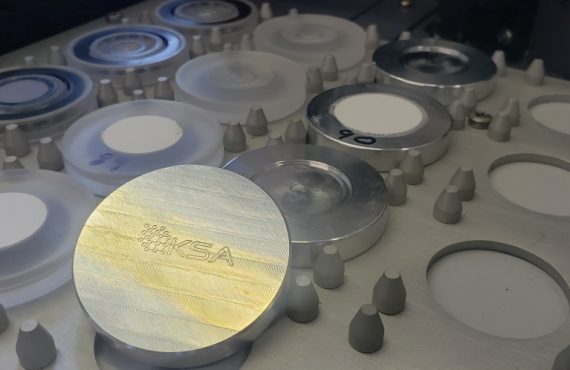

We’ve been experimenting with better ways to quantify the quality of XRD tubes in the shop. We use these tests on new and used tubes to monitor performance in two key areas: 1) intensity and 2) spectral purity.

What we’ve settled on is a test based on a wavelength-dispersive approach, which gives us plenty of intensity to work with while eliminating background scatter and fluorescence effects. Basically, we can extract more information from the data because the “noise” is almost zero.
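
One common way to get a wavelength-dispersive scan is to reflect the beam off a crystal of known d-spacing and step its angle. Bragg's law, nλ = 2d sin θ, then maps each angle to a wavelength. The post doesn't say which crystal or geometry the shop uses, so this minimal sketch assumes a LiF(200) analyzer (d = 2.0135 Å) in first order. The constant `LIF200_D` and the helper names are hypothetical.

```python
import math

# ASSUMPTION: LiF(200) analyzer crystal, d = 2.0135 angstroms.
# The actual crystal used in the shop's setup is not stated; substitute its d-spacing.
LIF200_D = 2.0135


def two_theta_to_wavelength(two_theta_deg: float, d_spacing: float = LIF200_D, order: int = 1) -> float:
    """Bragg's law solved for wavelength (angstroms): lambda = 2 d sin(theta) / n."""
    theta = math.radians(two_theta_deg / 2.0)
    return 2.0 * d_spacing * math.sin(theta) / order


def wavelength_to_two_theta(wavelength: float, d_spacing: float = LIF200_D, order: int = 1) -> float:
    """Inverse conversion: analyzer angle 2-theta (degrees) that reflects a given wavelength."""
    s = order * wavelength / (2.0 * d_spacing)
    if not 0.0 < s <= 1.0:
        raise ValueError("wavelength cannot be reflected by this crystal at this order")
    return 2.0 * math.degrees(math.asin(s))


def convert_scan(two_theta, intensity, d_spacing: float = LIF200_D):
    """Turn a (2-theta, intensity) scan into (wavelength, intensity) pairs sorted by wavelength."""
    pairs = [(two_theta_to_wavelength(t, d_spacing), i) for t, i in zip(two_theta, intensity)]
    return sorted(pairs)


if __name__ == "__main__":
    # Where would Cu K-alpha1 (1.5406 angstroms) appear on this hypothetical analyzer?
    print(f"Cu Ka1 analyzer 2-theta: {wavelength_to_two_theta(1.5406):.2f} deg")
    # Illustrative angles only; not measured data.
    for lam, counts in convert_scan([44.0, 45.0, 46.0], [0, 0, 0]):
        print(f"{lam:.4f} A")
```

Plotting intensity against the converted wavelength axis instead of 2θ gives the kind of wavelength vs. intensity view described in the next paragraph.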

We used Jade Pro to evaluate the scans, but they aren’t d-spacing vs. intensity, as one would normally expect. This scan shows wavelength vs. intensity, more like what one would see from a WDXRF spectrometer. Cu Kα1 and Kα2 are obvious, as is Cu Kβ1. Many of today’s XRD users have never seen a W Lα1 peak in their data, but it’s clearly visible here because this is an older tube. What I’ve never been able to see before is the W Lα2 peak in the green scan. You’re looking at a peak separated from W Lα1 by only ~62 eV.

No XRD detector on the market has energy resolution like that, so these two lines would always be lumped together, and in a diffractogram they would show up as an additional peak at every d-spacing in the sample. Only a handful of detectors (our SDD-150, for example) can even separate the W Lα lines from the Cu K lines. That’s the power of wavelength-dispersive techniques.

Incidentally, the most common device for cleaning up superfluous emission energies in XRD data is a diffracted-beam monochromator, which eliminates the W Lα lines through a secondary diffraction event, much like what we’re doing here.
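
The ~62 eV figure, and why it defeats an energy-dispersive detector, can be checked with a little arithmetic. This sketch converts tabulated line energies to wavelengths (λ[Å] = 12.398 / E[keV]). It then applies a deliberately crude resolvability test, where two lines count as separated if their spacing exceeds the detector FWHM. Finally, it uses Bragg's law to show where each line would diffract from one familiar reflection, Si (111) with d = 3.1356 Å. The 140 eV FWHM is an illustrative assumption, not a specification of any real detector (including ours), and the helper names are hypothetical.

```python
import math

# Planck constant times speed of light, in keV*angstrom (rounded).
HC_KEV_ANGSTROM = 12.39842

# Tabulated emission-line energies in eV (standard X-ray data tables).
LINES_EV = {
    "Cu Ka1": 8047.78,
    "Cu Ka2": 8027.83,
    "Cu Kb1": 8905.29,
    "W La1": 8397.6,
    "W La2": 8335.2,
}


def energy_to_wavelength(energy_ev: float) -> float:
    """Convert a photon energy in eV to a wavelength in angstroms."""
    return HC_KEV_ANGSTROM / (energy_ev / 1000.0)


def resolvable(e1_ev: float, e2_ev: float, fwhm_ev: float) -> bool:
    """Crude criterion (a simplification): lines are separated if spacing > detector FWHM."""
    return abs(e1_ev - e2_ev) > fwhm_ev


def bragg_two_theta(wavelength: float, d_spacing: float, order: int = 1) -> float:
    """Diffraction angle 2-theta in degrees from n*lambda = 2*d*sin(theta)."""
    s = order * wavelength / (2.0 * d_spacing)
    if not 0.0 < s <= 1.0:
        raise ValueError("reflection not accessible at this wavelength")
    return 2.0 * math.degrees(math.asin(s))


if __name__ == "__main__":
    fwhm = 140.0  # ASSUMPTION: illustrative energy-dispersive detector FWHM, eV
    split = LINES_EV["W La1"] - LINES_EV["W La2"]
    print(f"W La1 - W La2 separation: {split:.1f} eV")  # 62.4 eV
    print("W La1 vs W La2 resolvable:", resolvable(LINES_EV["W La1"], LINES_EV["W La2"], fwhm))
    print("W La1 vs Cu Ka1 resolvable:", resolvable(LINES_EV["W La1"], LINES_EV["Cu Ka1"], fwhm))

    d_si111 = 3.1356  # Si (111) d-spacing, angstroms
    for name, energy in LINES_EV.items():
        lam = energy_to_wavelength(energy)
        print(f"{name}: {lam:.5f} A, 2-theta on Si (111) = {bragg_two_theta(lam, d_si111):.2f} deg")
```

With these numbers, W Lα1 lands about 1.2° 2θ below the Cu Kα1 peak on Si (111). A diffracted-beam monochromator is there to remove exactly this kind of extra peak.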

Characterizing emissions is nothing new. In fact, I started wanting to improve our approach after listening to a talk at the Denver X-ray Conference (DXC) about Jim Cline’s famous XRD system, which NIST uses for the primary data collection on the CRMs we all use. To paraphrase Sir Arthur Conan Doyle, “When you explain every extraneous data point, the remaining information is the pure truth of the sample.”